16 Feb 2026 · 6 minute read

As coding agents become collaborative co-workers, orchestration takes center stage


The consensus emerging from industry is that as AI agents grow more autonomous and collaborative, the role of human technical workers will shift from hands-on implementation to supervision and direction. That trajectory has already surfaced in recent discussions about what agentic coding means for software development jobs, and in its recent agentic coding trends report, Anthropic concluded that engineers will increasingly act as orchestrators rather than implementers.

“Engineers who, only a few years ago, wrote every line of code will increasingly orchestrate long-running systems of agents that handle implementation details so they can focus on architecture and strategy,” the report notes.

We’re already seeing this shift materialise in product design, as many of the AI tooling companies roll out dedicated surfaces for coordinating and supervising multiple agents in parallel.

Supervising AI agents through Codex

Earlier this month, OpenAI launched a new desktop app for its Codex coding agent, designed to let developers supervise long-running coding tasks, monitor agent activity, and steer execution from a persistent local interface.

“Models are now capable of handling complex, long-running tasks end to end and developers are now orchestrating multiple agents across projects: delegating work, running tasks in parallel, and trusting agents to take on substantial projects that can span hours, days, or weeks,” OpenAI wrote. “The core challenge has shifted from what agents can do to how people can direct, supervise, and collaborate with them at scale – existing IDEs and terminal-based tools are not built to support this way of working.”

Launching initially for macOS, with a waiting list for Windows and Linux, the new desktop app supports parallel agent sessions, persistent task history, and real-time oversight of agent activity, giving developers a centralised surface for coordinating work that previously unfolded across terminals and chat interfaces.

In the days that followed, other companies in the agentic coding space launched similar offerings.

Augment and Warp build for orchestration

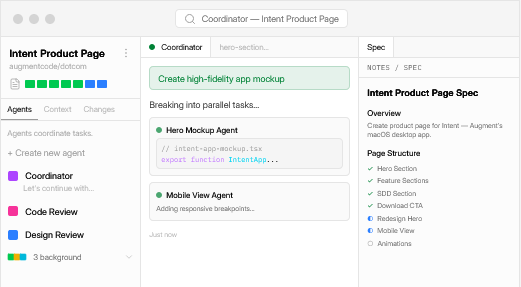

Augment, a San Francisco-based startup building AI-assisted coding tools for software teams, unveiled Intent, dubbed a “workspace for agent orchestration.”

Available initially for macOS, Intent is a desktop workspace designed to coordinate multiple agents in parallel. Developers define the specification, review and approve a proposed plan from a coordinator agent, and then allow specialist agents to execute tasks in isolated git worktrees. A verifier agent checks the results before changes are surfaced.
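To make the plan-approve-execute-verify flow more concrete, here is a minimal Python sketch of the general coordinator/specialist/verifier pattern. It is not Augment's implementation or API: `coordinator_plan`, `run_pipeline`, the `agent/task-N` branch names and the `../wt-task-N` paths are all hypothetical, and the "agents" are plain callables. The one real detail is the `git worktree add -b <branch> <path>` command, which is the standard git way to give each task its own branch and working directory; the sketch only builds that command and does not execute it.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Task:
    name: str
    branch: str    # hypothetical per-task branch name
    worktree: str  # hypothetical per-task checkout path


def coordinator_plan(spec: str) -> list[Task]:
    """Hypothetical coordinator: one task per non-empty line of the spec."""
    lines = (s.strip() for s in spec.splitlines() if s.strip())
    return [
        Task(name=line, branch=f"agent/task-{i}", worktree=f"../wt-task-{i}")
        for i, line in enumerate(lines)
    ]


def worktree_command(task: Task) -> list[str]:
    """Real git syntax for an isolated branch + checkout per task (built, not run)."""
    return ["git", "worktree", "add", "-b", task.branch, task.worktree]


def run_pipeline(
    spec: str,
    approve: Callable[[list[Task]], bool],
    specialist: Callable[[Task], str],
    verifier: Callable[[Task, str], bool],
) -> dict[str, str]:
    """Plan -> human approval -> specialist execution -> verification."""
    tasks = coordinator_plan(spec)
    if not approve(tasks):  # the developer reviews the plan before any work starts
        return {}
    surfaced: dict[str, str] = {}
    for task in tasks:
        output = specialist(task)   # each specialist would work in its own worktree
        if verifier(task, output):  # only verified results are surfaced for review
            surfaced[task.name] = output
    return surfaced


if __name__ == "__main__":
    spec = "Add login endpoint\nWrite login tests"
    print(worktree_command(coordinator_plan(spec)[0]))
    # ['git', 'worktree', 'add', '-b', 'agent/task-0', '../wt-task-0']
```

Keeping each task in a separate worktree means parallel specialists never edit the same checkout, so a failed or rejected task can be discarded without touching the others.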

The system supports Augment’s own agents as well as external agents such as Claude Code and Codex.

Warp, the company behind a developer terminal built for coding with AI agents, also introduced Oz, pitched as an “orchestration platform for cloud agents.”

Oz runs coding agents in persistent cloud environments, allowing teams to launch, monitor and manage multiple agents concurrently. Each run generates an audit trail and session link, and agents can be controlled via CLI or API, giving developers visibility into long-running tasks beyond their local machine.

This means developers can spin up parallel agents to tackle different parts of a project, inspect their progress in real time, step in to adjust instructions if something veers off course, and review a full history of actions once a task completes.
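The launch, monitor, intervene and review loop described above can be illustrated with a small, self-contained Python sketch. It does not use Oz's CLI or API, which are not documented here. `AgentRun`, `execute`, `launch_parallel` and `steer` are hypothetical names, and `execute` is a stand-in that only logs steps instead of doing real agent work. What it shows is the shape of the idea: several runs in parallel, each with its own ID and a timestamped audit trail, where a human's mid-run instruction change is itself recorded in that trail.

```python
import threading
import uuid
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AgentRun:
    """Hypothetical record of one agent run (not Oz's actual data model)."""
    instruction: str
    run_id: str = field(default_factory=lambda: uuid.uuid4().hex[:8])
    audit: list[tuple[str, str]] = field(default_factory=list)
    _lock: threading.Lock = field(default_factory=threading.Lock, repr=False, compare=False)

    def record(self, event: str) -> None:
        # Append a UTC-timestamped event so the full history can be reviewed later.
        with self._lock:
            self.audit.append((datetime.now(timezone.utc).isoformat(), event))

    def steer(self, new_instruction: str) -> None:
        # A human steps in mid-run; the change itself becomes part of the audit trail.
        self.record(f"instruction changed: {self.instruction!r} -> {new_instruction!r}")
        self.instruction = new_instruction


def execute(run: AgentRun, steps: int = 3) -> AgentRun:
    """Stand-in for real agent work: each step just logs the current instruction."""
    run.record("started")
    for i in range(steps):
        run.record(f"step {i}: working on {run.instruction!r}")
    run.record("finished")
    return run


def launch_parallel(instructions: list[str]) -> list[AgentRun]:
    """Run one hypothetical agent per instruction concurrently; results keep input order."""
    runs = [AgentRun(text) for text in instructions]
    with ThreadPoolExecutor(max_workers=max(1, len(runs))) as pool:
        return list(pool.map(execute, runs))


if __name__ == "__main__":
    for run in launch_parallel(["fix flaky test", "update docs"]):
        print(run.run_id, [event for _, event in run.audit])
```

In a real system, the audit list would live in durable storage behind a session link rather than in memory, but the principle is the same: every action, including human interventions, is appended to a per-run history that can be inspected once the task completes.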

The case for agent orchestration

While each of these companies is approaching the challenge from a different angle, they are responding to the same underlying shift: AI agents are becoming persistent systems that run in parallel, more akin to a small digital workforce than a single assistant waiting for prompts.

As agents take on longer-running, multi-step tasks, developers need ways to coordinate them, monitor progress, intervene when necessary, and maintain a clear record of what has been executed. Managing one agent is a different task from managing many operating simultaneously across a codebase.

And this is what we’re now seeing emerge – a layer of tooling built to support that reality. As coding agents become collaborators, structured oversight is essential, providing visibility, control and accountability.
