8 Jan 2026 · 7 minute read

Thomas Krier

Thomas Krier is an AI engineer and entrepreneur with 20+ years of experience building data- and AI-driven systems. As CEO of Krier Intelligence, he develops multi-agent systems for competitive intelligence featuring advanced RAG pipelines, computer use agents, and universal crawlers. His work spans from pioneering dynamic pricing in insurance to creating AI-powered recruitment solutions at Umynd with intelligent agents and tools. A strong advocate for high-quality data engineering, Thomas focuses on building "agents that build agents" and competitive evaluation frameworks. As founder, architect, and engineer of data- and AI-driven companies, he has developed production-ready data pipelines and AI- and agent-based systems across diverse industries.

From Prompts to AGENTS.md: What Survives Across Thousands of Runs


We are convinced we're already living in the intelligence explosion scenario. We don't just need to build agents ourselves — we need to build agents that orchestrate agents that build agents. This recursive pattern isn't just a meta-theme; it describes our daily reality.

The pivotal insight came from frustration: we got tired of waiting for one agent to finish a task. Why not have multiple agents working in parallel? That pattern grew into something we needed to systematize.

Note: Our experiments are exploratory and not statistically representative. Treat everything below as patterns you can adapt — not universal claims.

AGENTS.md as Persistent Memory

A simple framing: system prompts define general behavior; AGENTS.md encodes project-, component-, and tool-specific memory.

AGENTS.md isn't just configuration. It's persistent memory across sessions — a place to store learnings about what to do and what to avoid.

Hierarchy matters. Place AGENTS.md at different directory levels (root → component → tool). When an agent traverses the structure, it gathers rules from parent directories. General rules flow down, component-specific patterns stay local.
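To make the traversal concrete, here is a minimal Python sketch of gathering rule files from the repository root down to the directory an agent is working in. It only illustrates the idea described above; how a particular agent CLI actually discovers and merges these files is not covered here, and the function names `collect_agent_rules` and `merge_rules` are hypothetical.

```python
from pathlib import Path


def collect_agent_rules(start_dir: Path, repo_root: Path,
                        filename: str = "AGENTS.md") -> list[Path]:
    """Return rule files from repo_root down to start_dir, most general first."""
    start_dir = start_dir.resolve()
    repo_root = repo_root.resolve()
    if start_dir != repo_root and repo_root not in start_dir.parents:
        raise ValueError(f"{start_dir} is not inside {repo_root}")

    # Walk upward from the working directory to the root, then reverse so
    # general rules come before component- and tool-specific ones.
    chain = [start_dir]
    while chain[-1] != repo_root:
        chain.append(chain[-1].parent)
    chain.reverse()

    return [d / filename for d in chain if (d / filename).is_file()]


def merge_rules(files: list[Path]) -> str:
    """Concatenate rule files, labelling each section with its source path."""
    sections = []
    for f in files:
        body = f.read_text(encoding="utf-8").strip()
        sections.append(f"<!-- {f} -->\n{body}")
    return "\n\n".join(sections)
```

Ordering the result from general to specific means a local rule appears last in the merged text, which is one simple way to let component-level guidance refine the root-level rules.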

Sharing between agents. When running Claude and Codex in parallel, have CLAUDE.md reference the main AGENTS.md, or symlink to the same file. Consistency across agents matters.
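The symlink variant can be scripted. The sketch below assumes both files live in the same directory and that the platform allows creating symlinks (on Windows this may need extra privileges); `link_claude_to_agents` is a hypothetical helper name.

```python
from pathlib import Path


def link_claude_to_agents(project_dir: Path) -> Path:
    """Make CLAUDE.md a relative symlink to AGENTS.md in the same directory."""
    agents = project_dir / "AGENTS.md"
    claude = project_dir / "CLAUDE.md"
    if not agents.is_file():
        raise FileNotFoundError(f"{agents} does not exist")
    if claude.is_symlink():
        claude.unlink()  # replace a stale link
    elif claude.exists():
        # Do not silently overwrite hand-written rules.
        raise FileExistsError(f"{claude} is a regular file; merge it by hand first")
    # A relative target keeps the link valid when the repository is moved.
    claude.symlink_to("AGENTS.md")
    return claude
```

Refusing to overwrite a regular CLAUDE.md is a deliberate choice in this sketch: existing rules should be merged into AGENTS.md by a person before the link replaces them.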

Meta-Prompting for Rule Evolution

What makes AGENTS.md compound over time is a feedback loop that turns failures into updated rules.

When we encounter a problem: "Don't do this again, and adjust your rules to avoid this in the future."

After a successful session: "Reflect on this session and propose five improvements to the rules. Wait for approval."

That's how agents can learn. They propose rules we wouldn't have thought of. And the prompts agents generate for meta-prompting are often better than prompts humans write.
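The "wait for approval" step is the part worth keeping under human control. A minimal sketch of that gate, assuming the agent's proposals arrive as a list of strings and a person selects which indices to accept, might look like this. The `## Learned rules` heading and the function name are illustrative assumptions, not a convention prescribed above.

```python
from datetime import date
from pathlib import Path

# The reflection prompt quoted above, sent at the end of a successful session.
REFLECT_PROMPT = (
    "Reflect on this session and propose five improvements to the rules. "
    "Wait for approval."
)


def apply_approved_rules(rules_file: Path, proposals: list[str],
                         approved: set[int]) -> list[str]:
    """Append only the human-approved proposals to the rules file."""
    existing = rules_file.read_text(encoding="utf-8") if rules_file.exists() else ""
    accepted = []
    for index, proposal in enumerate(proposals):
        rule = proposal.strip()
        # Skip rejected, empty, or already recorded rules (naive substring check).
        if index in approved and rule and rule not in existing:
            accepted.append(rule)
    if accepted:
        block = f"\n## Learned rules ({date.today().isoformat()})\n"
        block += "".join(f"- {rule}\n" for rule in accepted)
        with rules_file.open("a", encoding="utf-8") as fh:
            fh.write(block)
    return accepted
```

Dating each batch makes it easier to trace a rule back to the session that produced it and to prune rules that stop being useful.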

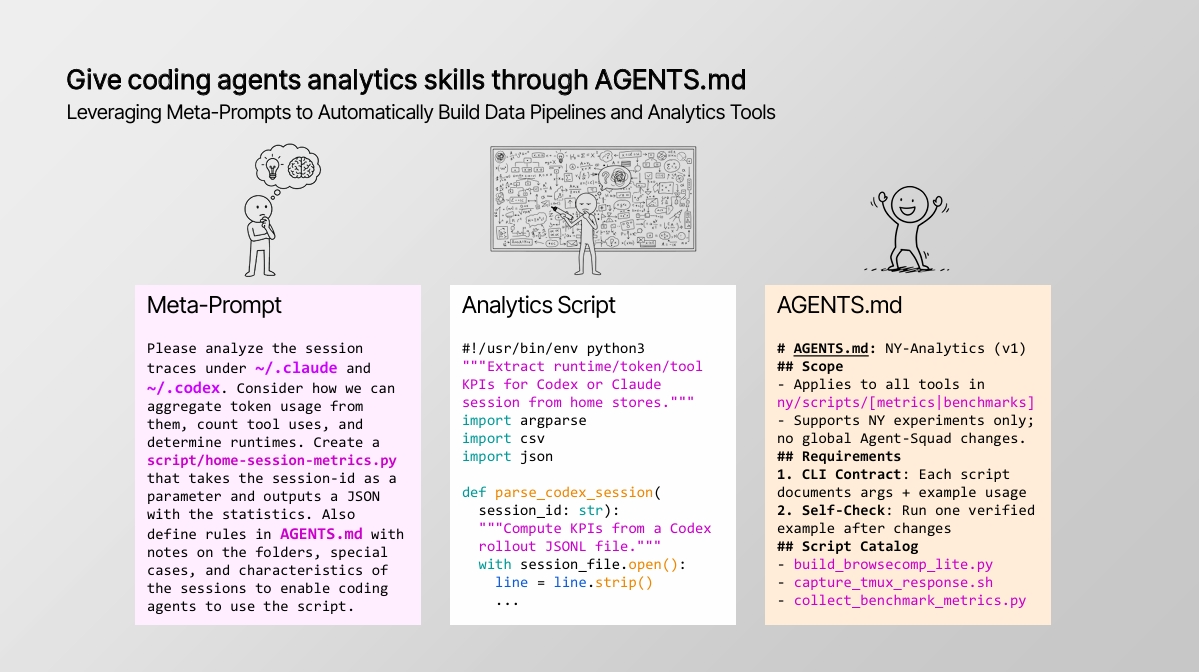

Give Coding Agents Analytical Skills

The self-similar pattern becomes concrete here. We asked the agent to analyze its own session traces in the home directory (~/.claude and ~/.codex), aggregate token usage, count tool calls, and determine runtimes — then create both a script and AGENTS.md rules so other agents can use it.

The agent built the tool for its own observation. If you want data-driven orchestration decisions later, you need this kind of self-reflecting infrastructure.
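For readers who want a starting point, here is a rough sketch of such an analysis script. The trace format is not described in this article, so everything about the schema is an assumption: the sketch expects JSONL files where each line may carry a `timestamp`, a `message.usage` object with `input_tokens` and `output_tokens`, and `message.content` items of type `tool_use`. Adjust the field names to whatever your agent actually writes.

```python
import json
from collections import Counter
from datetime import datetime
from pathlib import Path


def summarize_trace(path: Path) -> dict:
    """Aggregate tokens, tool calls, and wall-clock runtime for one JSONL trace."""
    tokens = Counter()
    tools = Counter()
    stamps = []
    for line in path.read_text(encoding="utf-8").splitlines():
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip blank or partially written lines
        if not isinstance(event, dict):
            continue
        if isinstance(event.get("timestamp"), str):
            # Assumed ISO 8601 timestamps; map a trailing "Z" to an explicit offset.
            stamps.append(datetime.fromisoformat(event["timestamp"].replace("Z", "+00:00")))
        message = event.get("message")
        if not isinstance(message, dict):
            continue
        usage = message.get("usage") or {}
        tokens["input"] += usage.get("input_tokens", 0)
        tokens["output"] += usage.get("output_tokens", 0)
        content = message.get("content")
        if isinstance(content, list):
            for item in content:
                if isinstance(item, dict) and item.get("type") == "tool_use":
                    tools[item.get("name", "unknown")] += 1
    runtime = (max(stamps) - min(stamps)).total_seconds() if stamps else 0.0
    return {
        "input_tokens": tokens["input"],
        "output_tokens": tokens["output"],
        "tool_calls": dict(tools),
        "runtime_seconds": runtime,
    }


def summarize_all(trace_root: Path) -> dict[str, dict]:
    """Summarize every .jsonl file below trace_root, e.g. Path('~/.claude')."""
    root = trace_root.expanduser()
    return {str(p): summarize_trace(p) for p in sorted(root.rglob("*.jsonl"))}
```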

Testing and Context Engineering

Testing Rule #1: No fake, no mock, no stub. These models try hard to fulfill tasks — including faking tests. We make it explicit: you're a coding agent, write real code.

Context degrades. When the context fills up or the chain of thought goes wrong, agent intelligence degrades. You might not notice through the prompts until it's already happened.

ESC-ESC Flows. In CLI agents, double-escape lets you edit conversation history — context surgery when needed.

Handoffs over Auto-Compaction. Auto-compaction is opaque. Instead, ask the agent to summarize explicitly, check the summary, and hand it to a fresh session. Controlled handoffs are transparent and reviewable.
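One way to keep the review step from being skipped is to encode it in the handoff itself. The sketch below is an illustration only: the `Handoff` structure, its fields, and the prompt wording are assumptions, not a format any agent CLI requires.

```python
from dataclasses import dataclass, field

# Prompt sent to the old session; the wording is illustrative.
SUMMARY_REQUEST = (
    "Summarize the current state for a handoff: goal, decisions made, "
    "files touched, and open items. Do not continue working."
)


@dataclass
class Handoff:
    goal: str
    summary: str
    open_items: list[str] = field(default_factory=list)
    reviewed: bool = False  # set to True only after a human checked the summary

    def to_prompt(self) -> str:
        """Render the opening prompt for the fresh session."""
        if not self.reviewed:
            raise ValueError("review the summary before handing it off")
        items = "\n".join(f"- {item}" for item in self.open_items) or "- (none)"
        return (
            "You are continuing an earlier session.\n\n"
            f"Goal:\n{self.goal}\n\n"
            f"Summary of previous work:\n{self.summary}\n\n"
            f"Open items:\n{items}\n"
        )
```

Because the rendered prompt is plain text, it can also be saved next to the code and reviewed like any other change.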

Multi-Agent Orchestration Experiments

We gave agents the skill to spawn other agents via tmux. Three experiments built on each other:

Baseline. Claude and Codex each built a working Strands-based search agent from a single prompt. This was impossible two years ago — now it's table stakes.

Rules as Enablers. Adding AGENTS.md with basic rules (API keys, patterns, logging) increased token usage and runtime — but significantly improved solution quality. Rules didn't constrain; they unlocked deeper exploration by reducing environmental uncertainty.

Arena + Co-Op Refinement. Running twenty agents in parallel creates an arena: pick the best. But cooperative refinement proved more powerful. Give agents each other's solutions: "What's better than yours? What could improve your approach?" The refinement budget is much smaller than the initial build budget, because agents adapt rather than reconstruct.
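The two modes can be sketched in a few lines of Python. How solutions are scored is not specified here, so the numeric `score` field below is a hypothetical stand-in for whatever evaluation you use; the refinement prompt reuses the two questions quoted above.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    agent: str
    solution: str
    score: float  # hypothetical evaluation score; higher is better


def pick_winner(candidates: list[Candidate]) -> Candidate:
    """Arena mode: keep only the best-scoring solution."""
    return max(candidates, key=lambda c: c.score)


def refinement_prompts(candidates: list[Candidate],
                       peers_per_agent: int = 2) -> dict[str, str]:
    """Co-op mode: show each agent the strongest solutions of its peers."""
    prompts = {}
    for own in candidates:
        peers = sorted(
            (c for c in candidates if c.agent != own.agent),
            key=lambda c: c.score,
            reverse=True,
        )[:peers_per_agent]
        shown = "\n\n".join(f"### Solution by {p.agent}\n{p.solution}" for p in peers)
        prompts[own.agent] = (
            f"Here are solutions from other agents:\n\n{shown}\n\n"
            "What's better than yours? What could improve your approach? "
            "Adapt your existing solution instead of rebuilding it."
        )
    return prompts
```

Limiting each agent to a few peer solutions keeps the refinement prompt small, which fits the observation that refinement needs a much smaller budget than the initial build.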

One agent invented an arena pattern inside its own implementation — running multiple internal strategies and selecting the best. Self-reflection emerged without explicit instructions.
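For the tmux-based spawning that these experiments rely on, a minimal sketch follows. It builds one detached tmux session per agent using `tmux new-session -d -s <name> -c <dir> <command>`. The agent command `my-agent-cli --prompt` is a placeholder, not a real CLI; substitute the actual command and flags of the agent you run. The sketch defaults to a dry run so the commands can be inspected before anything is started.

```python
import shlex
import subprocess

# Hypothetical agent CLI; replace with the real command and flags of your agent.
AGENT_CMD = ["my-agent-cli", "--prompt"]


def tmux_spawn_command(session: str, prompt: str, workdir: str) -> list[str]:
    """Build the tmux invocation that starts one agent in a detached session."""
    return [
        "tmux", "new-session",
        "-d",              # detached: do not attach the current terminal
        "-s", session,     # session name (tmux rejects '.' and ':' in names)
        "-c", workdir,     # start directory for the agent
        shlex.join([*AGENT_CMD, prompt]),  # shell command run inside the session
    ]


def spawn_agents(prompts: dict[str, str], workdir: str,
                 dry_run: bool = True) -> list[list[str]]:
    """Start one tmux session per prompt; with dry_run, only return the commands."""
    commands = [tmux_spawn_command(name, prompt, workdir)
                for name, prompt in prompts.items()]
    if not dry_run:
        for cmd in commands:
            subprocess.run(cmd, check=True)
    return commands
```

Named sessions make it possible to attach to a single agent later with `tmux attach -t <name>` and watch or correct it while the others keep running.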

Community Pattern Mining

We analyzed over 40,000 GitHub repositories, extracting AGENTS.md, CLAUDE.md, and Skills.md files. The adoption curves are clear: CLAUDE.md spiked first, AGENTS.md followed weeks later, Skills.md is exploding now.

When we put these files into a vector database and let agents retrieve relevant examples, their implementations improve significantly. Community-accumulated knowledge becomes a resource for agent bootstrapping. Patterns that survived real use across hundreds of projects inform better first drafts.
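The retrieval step can be illustrated without any infrastructure. The sketch below swaps the vector database for a toy bag-of-words cosine similarity, purely to show the shape of "find the most relevant rule files for this task"; a real setup would use learned embeddings and a proper vector store. The corpus entries in the usage example are made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, corpus: dict[str, str], k: int = 3) -> list[str]:
    """Return the keys of the k most similar documents, dropping zero matches."""
    q = embed(query)
    scored = [(cosine(q, embed(text)), key) for key, text in corpus.items()]
    scored = [(score, key) for score, key in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [key for _, key in scored[:k]]


# Made-up example corpus of rule snippets.
corpus = {
    "repo-a/AGENTS.md": "Run pytest for integration tests. Never use mocks or stubs.",
    "repo-b/AGENTS.md": "Use tmux to spawn agents in parallel sessions.",
    "repo-c/CLAUDE.md": "Store API keys in environment variables and log every request.",
}
matches = retrieve("pytest integration tests without mocks", corpus, k=2)
```

The retrieved snippets would then be placed into the agent's context as examples before it drafts its own rules or implementation.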

The Path Forward

Patterns that survive aren't clever prompts. They're structured memories that accumulate learning across runs, meta-patterns that let agents improve themselves, and orchestration that multiplies capabilities.

The building blocks combine: rules enable exploration, cooperative refinement amplifies strengths, community patterns accelerate bootstrapping.

We're orchestrating agents that spawn agents that build agents. Models will keep changing — Gemini 3, Opus 4.5, whatever comes next. But hierarchical memory, meta-learning loops, and cooperative refinement appear to be fundamental patterns that transcend specific models and agents.

Happy to connect on LinkedIn to share more patterns.

---

Watch the AI Native DevCon Fall 2025 recording for demos and details.

For more on AI-native development tools and practices, explore the AI Native Dev Landscape.