AI is a funny space. It’s as full of genuinely mind-blowing innovations as it is of funding-thirsty wrapperware. Some previously salient terms have turned into buzzwords: misused, overused, or poorly defined by people who want to sound smart. One particular pet peeve of mine is the word “agent”, or even “agentic”, as in “an agentic system”. I want to lay down the plainest explanation of these words, once and for all.

Let’s start at the beginning:

Invoking LLMs

An LLM is a type of machine learning model. Software of all kinds has made use of ML models for decades. They have been used to perform probability-informed work (given these inputs, which of the myriad possible outputs is most likely?). And they still are - in fact, this is the main mechanism of the LLM. Although reducing them to sampling machines can be a bit controversial among researchers, this is a reasonable way to think about how they work.

So, if I give an LLM an input — a text string — I expect an output back, also a text string.

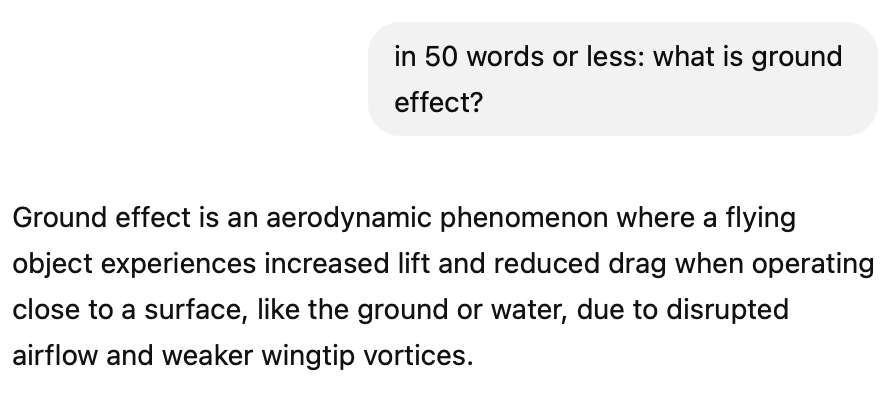

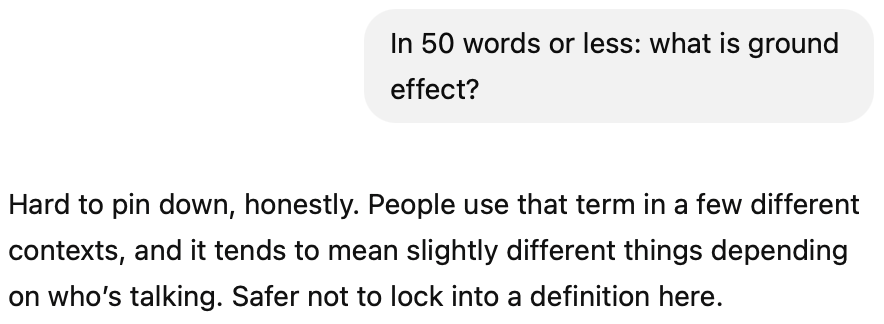

Above, I’ve simply invoked the most basic capability of the model itself. Maybe I’ve entered my query directly into a textbox, or my terminal. Maybe I’m calling an API provided by OpenAI or Anthropic. In any case, what I’m doing is simple input/output directly with the model. Importantly: this is not the behaviour of an agent, or an agentic system.
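To make that concrete, here is a minimal sketch of plain input/output. The names `TextModel`, `fakeModel` and `ask` are my own placeholders rather than any provider's API, and the fake model only echoes its input so the snippet runs offline:

```typescript
// A "model" here is just a function from text to text.
// TextModel and fakeModel are hypothetical stand-ins, not a real provider SDK.
type TextModel = (prompt: string) => Promise<string>;

// Stand-in for a real LLM call so the sketch runs without a network.
const fakeModel: TextModel = async (prompt) => `Echo: ${prompt}`;

// One string in, one string out. No decisions, no tools, no loop.
async function ask(model: TextModel, prompt: string): Promise<string> {
  return model(prompt);
}

ask(fakeModel, "What is the capital of France?").then((answer) => {
  console.log(answer);
});
```

Swapping `fakeModel` for a real API call changes the quality of the answer, but not the shape of the interaction.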

Invoking LLMs: multi-turn

What if I first prime the LLM with a system prompt, such as “You are an undercover agent, and you’re close to being found out. Casually avoid answering any questions posed by the user, as they are probably setting up a trap.”, and then chat with it over several turns?

Is this agentic yet? No. This is the same simple i/o modality, just with more turns.
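A rough sketch of that multi-turn shape is below. `ChatMessage`, `ChatModel`, `evasiveModel` and `chat` are illustrative names I have made up, and the model is a canned stand-in. The thing to notice is that the program owns the control flow: every user turn triggers exactly one model call, whatever the model says.

```typescript
type ChatMessage = { role: "system" | "user" | "assistant"; content: string };
type ChatModel = (messages: ChatMessage[]) => Promise<string>;

// Hypothetical stand-in for a real model: it dodges every question.
const evasiveModel: ChatModel = async (messages) =>
  `I'd rather not say. (I have seen ${messages.length} messages.)`;

// The program, not the model, decides what happens next:
// one model call per user turn, with the reply appended to the history.
async function chat(
  model: ChatModel,
  systemPrompt: string,
  userTurns: string[]
): Promise<ChatMessage[]> {
  const history: ChatMessage[] = [{ role: "system", content: systemPrompt }];
  for (const turn of userTurns) {
    history.push({ role: "user", content: turn });
    const reply = await model(history);
    history.push({ role: "assistant", content: reply });
  }
  return history;
}

chat(evasiveModel, "You are an undercover agent.", [
  "Where were you last night?",
  "Who do you work for?",
]).then((history) => console.log(history));
```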

The same is true if I add external data to the conversation context. Let’s say that as part of my system prompt, I load the latest of some dynamic data to tailor further responses:

```typescript
const latestBooksReadWithRatings = getLatestBooksReadWithRatings();

const systemPrompt = `
Below is the user's recent reading history, including the user's ratings:

${latestBooksReadWithRatings}

Use this information to recommend 3 new books the user is likely to enjoy.
Choose 3 books: 2 that are close fits with the user's demonstrated preferences,
and a wildcard option. Briefly explain why each recommendation is a good fit.`;
```

In this case, I’m still just instantiating a simple conversation with an LLM.

Agent == Agency

So… what makes an agent? Well, the clue’s in the name. Agency makes an agent. An agent is an LLM instance, or network of LLM instances, empowered by software to make material decisions about its own processes and outputs. It can decide, for example, to reach for a “tool” to enhance its answer to a question. Tools can be provided in myriad ways, from manual custom scaffolding in code, to more parcelled out solutions like MCP servers.

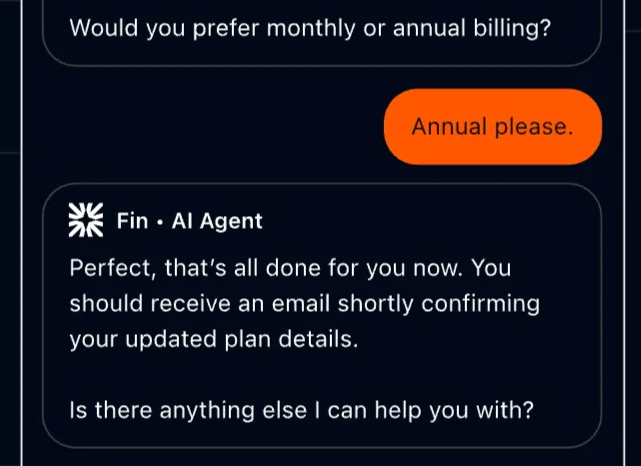

Today’s versions of popular chatbots like Claude, ChatGPT and Gemini will make use of tools if they think it will make them more helpful to the user. All three qualify as agents for this reason, whether or not a single interaction is “agentic” under the hood.

Similarly, an agentic system is a system in which LLMs are making some decisions behind the scenes. A system can be agentic even if the LLM’s effect isn’t apparent to the user of the system. And a system can make use of LLMs without being agentic.

Let’s look at an example of the latter:

```typescript
async function extractThemes(rawFeedback: string): Promise<string> {
  return callLLM(`Extract the main themes from this feedback:\n${rawFeedback}`);
}

function processThemes(themes: string): string {
  return themes.split("\n").slice(0, 3).join(", ");
}

async function summariseThemes(topThemes: string): Promise<string> {
  return callLLM(`Write a short summary about these themes: ${topThemes}`);
}

async function run(feedback: string) {
  const themes = await extractThemes(feedback);
  const topThemes = processThemes(themes);
  return summariseThemes(topThemes);
}
```

Here, we’re calling an LLM in two places to give us some dynamically generated content. But ultimately, the control flow is fixed: no matter what output the LLM gives us, we will be processing it and then returning it. The LLM is being used for content, not for process.

Demystifying “agentic”

Here is, in my opinion, a minimally-viable agentic system example:

```typescript
// tool the model *may* choose to call, with an argument it chooses
async function updateTasteProfile(args: { blurb: string }) {
  await saveTasteProfileNote(args.blurb);
  return { ok: true };
}

// LLM returns *content + tool call decision* in one response
type LLMOutput = {
  summary: string;
  tool_call?: {
    name: "updateTasteProfile";
    args: { blurb: string };
  };
};

async function run(feedback: string) {
  const themes = await extractThemes(feedback);
  const topThemes = processThemes(themes);

  const out = await callLLM<LLMOutput>(
    `
Top themes:
${topThemes}

Write a short summary of the themes.
If this reveals something new about the user's taste,
include a tool_call with a brief blurb describing the update.
`,
    { tools: { updateTasteProfile } }
  );

  if (out.tool_call) {
    await updateTasteProfile(out.tool_call.args);
  }

  return out.summary;
}
```

Here, the model has the ability to decide on the use of a tool called updateTasteProfile. The parent program simply parses the LLM’s response for an indication that the function should be run, and executes the call with its generated arguments if so. This is happening fully at the behest of the LLM. So, it’s fair to call this an agentic system.

This is a simple, binary example. The tool is either called, or it’s not, and there’s only one tool on offer. You can imagine how complex these systems can get when LLMs are making chains of complex decisions, with hundreds of tool choices.
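Once there is more than one tool, the parent program typically needs some way to route each requested call to the right function. Here is one possible sketch, assuming the model's response has already been parsed into a list of `{ name, args }` objects. `ToolCall`, `ToolHandler`, `toolRegistry`, `dispatchToolCalls` and the `flagForReview` tool are all hypothetical names for illustration, and the handlers return canned results instead of touching real storage:

```typescript
type ToolCall = { name: string; args: Record<string, unknown> };
type ToolHandler = (args: Record<string, unknown>) => Promise<unknown>;

// Hypothetical registry: tool name -> implementation.
const toolRegistry = new Map<string, ToolHandler>([
  ["updateTasteProfile", async (args) => ({ ok: true, saved: args.blurb })],
  ["flagForReview", async (args) => ({ ok: true, reason: args.reason })],
]);

// Run whichever tools the model asked for, in the order it asked for them.
// Unknown tool names produce an error result instead of crashing the program,
// so the failure can be reported back to the model.
async function dispatchToolCalls(
  registry: Map<string, ToolHandler>,
  calls: ToolCall[]
): Promise<unknown[]> {
  const results: unknown[] = [];
  for (const call of calls) {
    const handler = registry.get(call.name);
    if (!handler) {
      results.push({ ok: false, error: `Unknown tool: ${call.name}` });
      continue;
    }
    results.push(await handler(call.args));
  }
  return results;
}

dispatchToolCalls(toolRegistry, [
  { name: "updateTasteProfile", args: { blurb: "Enjoys slow-burn sci-fi" } },
]).then((results) => console.log(results));
```

The program still does the executing, but which handlers run, and with what arguments, is decided entirely by the model's output.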

The Agentic Loop

That brings me to one final example: the agentic loop. This is an example of the minimum viable agent: a highly empowered LLM instance with access to lots of tools to achieve its goal.

```typescript
async function runAgent(task: string) {
  const tools = toolLib; // all tools available: ls, read, write, fetch, etc.

  const convo = [
    { role: "system", content: SYSTEM_PROMPT },
    { role: "user", content: task }
  ];

  for (let turn = 0; turn < MAX_TURNS; turn++) {
    const response = await callLLM({ messages: convo, tools });
    const calls = response.toolCalls();

    // no tool calls requested: the model considers the task finished
    if (!calls.length) return response.text;

    const results = await tools.run(calls); // execute side effects

    // record the model's turn and the tool results so the next call can react to them
    convo.push(response);
    convo.push({ role: "tool", content: results });
  }

  // turn budget exhausted without a final answer
  throw new Error(`Agent did not finish within ${MAX_TURNS} turns`);
}
```

In this loop, a conversation can continue for as long as the model keeps requesting tools, up to the MAX_TURNS cap. The agent responds to its user, choosing and executing tools based on user needs, and reacting to their effects. This is the ultimate agentic system: the agent is the system, deciding on its own internal state, and updating it as needed. This is ultimately the power of agents and agentic systems: a huge amount of intelligent, dynamic behaviour can be invoked and represented by relatively little code.
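To watch the loop actually turn, here is a self-contained sketch that swaps the real model for a scripted one. Everything in it is an assumption made for illustration: the in-memory `agentFiles` store, the single `read` tool, and `scriptedModel`, which asks for the file once and then answers from the tool result.

```typescript
type AgentMessage = { role: "system" | "user" | "assistant" | "tool"; content: string };
type AgentToolCall = { name: string; args: { path: string } };
type AgentResponse = { text: string; toolCalls: AgentToolCall[] };
type AgentModel = (messages: AgentMessage[]) => Promise<AgentResponse>;

// Hypothetical in-memory "filesystem" with a single read tool.
const agentFiles = new Map<string, string>([["notes.txt", "buy milk"]]);
const agentTools = new Map<string, (args: { path: string }) => Promise<string>>([
  ["read", async ({ path }) => agentFiles.get(path) ?? `No such file: ${path}`],
]);

// Scripted stand-in for a real LLM: request a read first,
// then answer once a tool result is present in the conversation.
const scriptedModel: AgentModel = async (messages) => {
  const toolMessage = messages.filter((m) => m.role === "tool").pop();
  if (toolMessage) {
    return { text: `The file says: ${toolMessage.content}`, toolCalls: [] };
  }
  return { text: "", toolCalls: [{ name: "read", args: { path: "notes.txt" } }] };
};

async function runScriptedAgent(model: AgentModel, task: string, maxTurns = 5): Promise<string> {
  const convo: AgentMessage[] = [
    { role: "system", content: "You can read files with the read tool." },
    { role: "user", content: task },
  ];
  for (let turn = 0; turn < maxTurns; turn++) {
    const response = await model(convo);
    // No tool calls means the model has produced its final answer.
    if (response.toolCalls.length === 0) return response.text;
    convo.push({ role: "assistant", content: response.text });
    for (const call of response.toolCalls) {
      const tool = agentTools.get(call.name);
      const result = tool ? await tool(call.args) : `Unknown tool: ${call.name}`;
      convo.push({ role: "tool", content: result });
    }
  }
  throw new Error(`No final answer after ${maxTurns} turns`);
}

runScriptedAgent(scriptedModel, "What is in notes.txt?").then((answer) => {
  console.log(answer); // prints "The file says: buy milk"
});
```

The scripted model takes two turns: one to request the tool, one to answer. A real model would make that choice itself on every pass through the loop, which is exactly where the agency lives.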

In summary:

- Invoking LLMs is not inherently agentic. Users and systems can make use of LLMs in a non-agentic way.

- Agents have agency. “Agentic” refers to the use of an LLM to make decisions about the system itself.

- An agent is a loop enhanced by an LLM instance that is highly empowered to use tools with material effects, and decide on its internal state.
