13 Feb 202610 minute read

Three Context Eval Methodologies at Tessl - Skill Review, Task and Repo evals

13 Feb 202610 minute read

Evals at Tessl: version your context, then prove it works

Context management is the name of the game when working with agents. Once you hand an agent the right context in the right format, it can do surprisingly competent work without much intervention.

The problem is that “the right context” is usually assembled in a very adhoc way: Someone thinks of a useful heuristic for an agent and writes it in a markdown file, someone else writes a quick rule list, a third person pastes some internal notes in their AGENTS.md.

Over time, you end up with a disparate pile of context snippets between teams and teammates. Those snippets are… probably helpful? Possibly conflicting with other context in your environment? Out of date? Actively un-helpful…? It’s hard to know, and harder to keep track.

Tessl is built for that world: version and structure the context you give agents, and then check whether it actually does what you think it does. Let’s deep dive into the checking part.

Tessl’s three types of evals

There are three evaluation systems in the Tessl CLI, which each map to different questions.

- Skill review: how structurally sound is my

SKILL.md, does it comply to the standards ? - Task evals: does the context improve the agent behaviour & output on a given task?

- Repo evals: if I install this context in a real repository, how do agents use it to handle real changes? (beta access)

Below, we examine them one by one.

Skill review: is your SKILL.md (https://agentskills.io/) structurally sound?

This first evaluation checks the contents of your SKILL.md rather than running the agent on an external task.

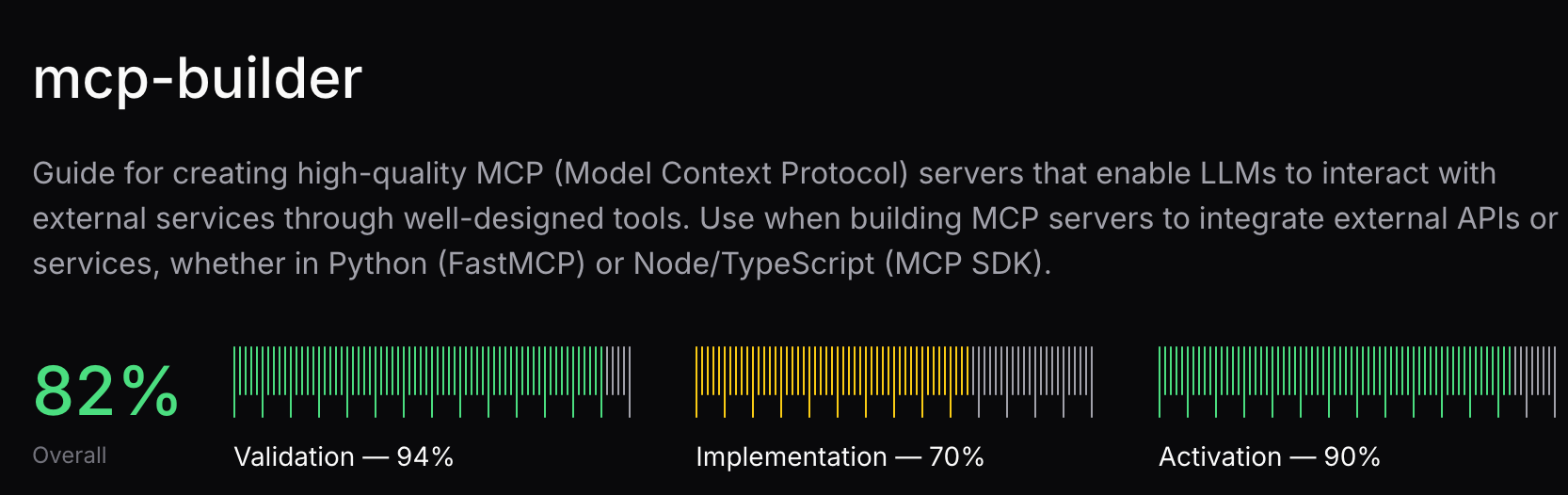

If you look at a skill’s review page in the Tessl Registry, you’ll see a breakdown of several score categories.

- Validation: Does the frontmatter parse correctly? Are required fields present? Are sections laid out correctly? This is about schema hygiene, and minimum requirements.

- Implementation quality: Are instructions clear? Are examples concrete? Is the guidance actionable rather than vague?

- Activation quality: Are triggers obvious? Is scope clearly defined? Does the description make the skill discoverable?

- Overall score: A combined view of the above, useful for quick comparison but not a substitute for reading the breakdown.

Here’s an example of a reviewed skill:

The criteria here are aligned with documented best practices for writing skills (including the public guidance Anthropic has published around agent-oriented tool/skill prompting and clear instruction design). The point is straightforward: the clearer and more constrained the skill is, the more predictable the agent tends to be when it uses it.

It’s also worth saying out loud that this part is closer to “linting and review” than a true eval. As LLM capabilities change, or perhaps as the agent skill protocol evolves, the bar for what counts as “clear enough” will shift, and we expect the validation and scoring criteria to evolve over time.

Task evals: does this context improve behaviour?

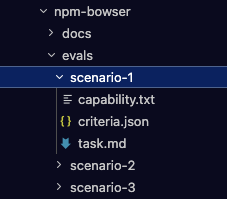

Task evals don't just look at the context files but actually have the agents use them to accomplish a set of scenarios. Each scenario includes a task (task.md) and scoring criteria (rubric)(criteria.json).

The rubric is a weighted checklist. We keep it simple on purpose. Simple rubrics are readable, debuggable, and stable. When scoring feels “mysterious,” it becomes hard to trust the result, and even harder to know what to fix.

At Tessl we package context into Tiles and this makes it easy to install extra context in a version controlled way from our registry. We use that to make the testing reproducible.

Each scenario runs twice:

- baseline: the agent solves the task without the extra context installed

- with tile: the agent solves the task with the tile’s skills/docs/rules available

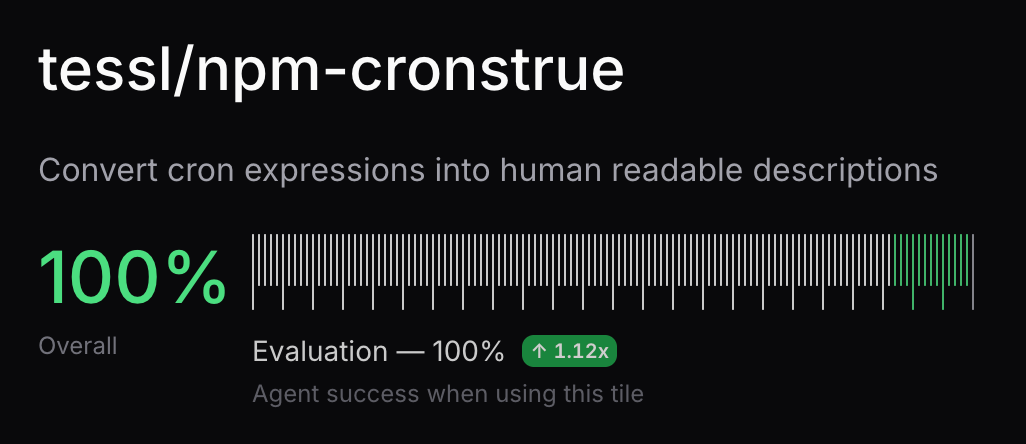

We then compare the agents performance on the scenarios we laid out in both conditions, giving you a result that looks (hopefully) something like this:

The useful part is the comparison between those two runs with everything else held constant.

Mechanically, each run happens in an isolated container. The agent produces the artifacts the task calls for (code, tests, config, whatever makes sense), and then a judge model scores the output against the rubric and explains the scoring.

That gives you per-criterion scores, written reasoning, totals, and a baseline vs with-tile comparison.

Keep in mind: a task eval doesn’t inspect the actual contents of the context. It only measures the effect the context has on agent behaviour in pre-defined scenarios. If the context is beautifully written, but doesn’t change outcomes in practice, you’ll see that reflected in a task eval.

Repo evals: what a “scenario derived from a commit” looks like

Repo evals are different still : They also use scenarios, but the scenarios not only involved the context and the agent but also an actual real world repository.

Here’s a concrete example. Imagine you have a repo with an API client library. Somebody makes a commit that updates the client to handle a new pagination format and touches three files:

- client.ts (pagination logic updated)

- types.ts (new response type)

- README.md (http://README.md) (example updated)

Now suppose you’ve written context that contains your internal conventions for this client library: preferred patterns, the right helper functions, and the “please don’t reimplement pagination by hand” rule, possibly bundled with related skills or documentation.

A repo eval takes a change like that and builds an eval scenario around it. At a high level, the scenario looks like:

- Start from the pre-commit repo state.

- Describe the change request in a way the agent can act on (often by using the diff as grounding and turning it into a clear instruction like: “Update pagination handling to match the new response shape; update types and docs accordingly.”).

- Run the agent twice in an isolated container:

- once without the repo tile available

- once with the repo tile installed

- Score the result with a rubric that checks for the kinds of things you care about in real repos: correctness, minimal unnecessary churn, and whether the agent followed your established patterns (this is where concepts like “abstraction adherence” show up — meaning it used your library’s intended abstractions instead of bypassing or re-creating them).

- Compare the two outputs so you can see whether the tile improved the outcome on that real change.

So you’re not “evaluating a repo” in the abstract, and you’re not “evaluating a diff” as a static object. You’re evaluating how the agent performs on a realistic change request that is grounded in actual repo history, and you’re testing whether the tile improves that performance.

This is useful because tiles can look great in isolated examples and still fail to help on real change patterns in a live codebase.

About variance (without getting too research-y about it)

In most cases, each eval scenario is executed once for baseline and once with the tile. LLMs and agent processes are non-deterministic, so we know that outcomes can vary from run to run.

We try to keep this practical by running multiple scenarios and looking at the overall pattern rather than reading too much into one outlier. Small negative differences often come down to normal model variability. Larger or consistent negative differences are usually a sign that the tile is confusing, contradictory, or pushing the agent toward unhelpful behaviour.

Why evaluation matters in practice

If you stop at “we have some context,” context management turns into a pile of unverified artefacts. People tweak prompts, adjust markdown, and trade tips, but it stays hard to tell whether anything is improving.

Evals give you a way to anchor this in something concrete. You can version the context you hand to an agent, run repeatable scenarios, and see whether the changes actually shift behaviour in the direction you want. That turns context into something you can maintain intentionally instead of something you accumulate accidentally.

Get started evaluating your context with Tessl today at tessl.io/registry.

Join Our Newsletter

Be the first to hear about events, news and product updates from AI Native Dev.