AI-powered products seem to come in different flavors. Some are big and dramatic, talking about reimagining the world and a bold new future. These solutions are exciting - and potentially frightening - but they also feel quite far away. Others are smaller and pragmatic, typically focusing on productivity. They promise to make you twice as fast and take away boring and repetitive tasks, making them compelling to adopt - but more incremental than revolutionary.

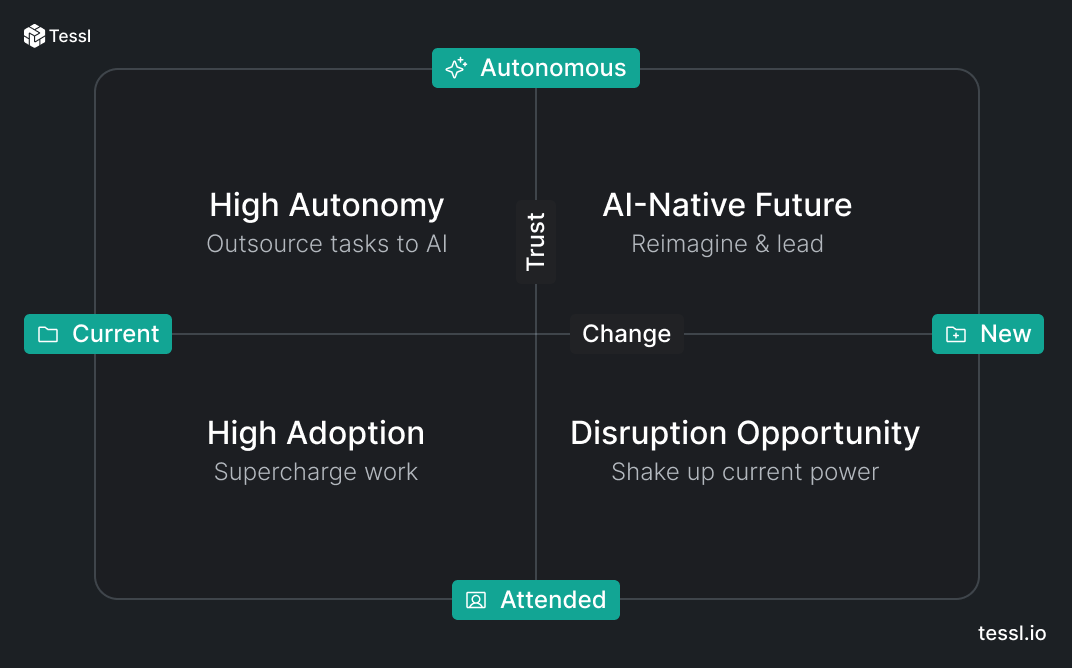

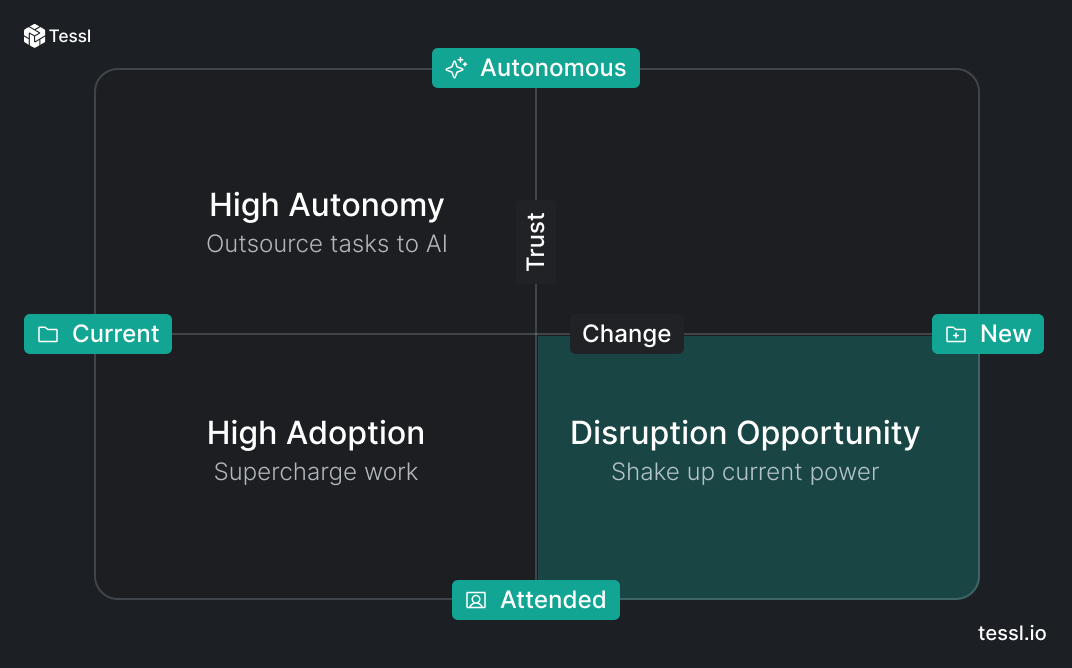

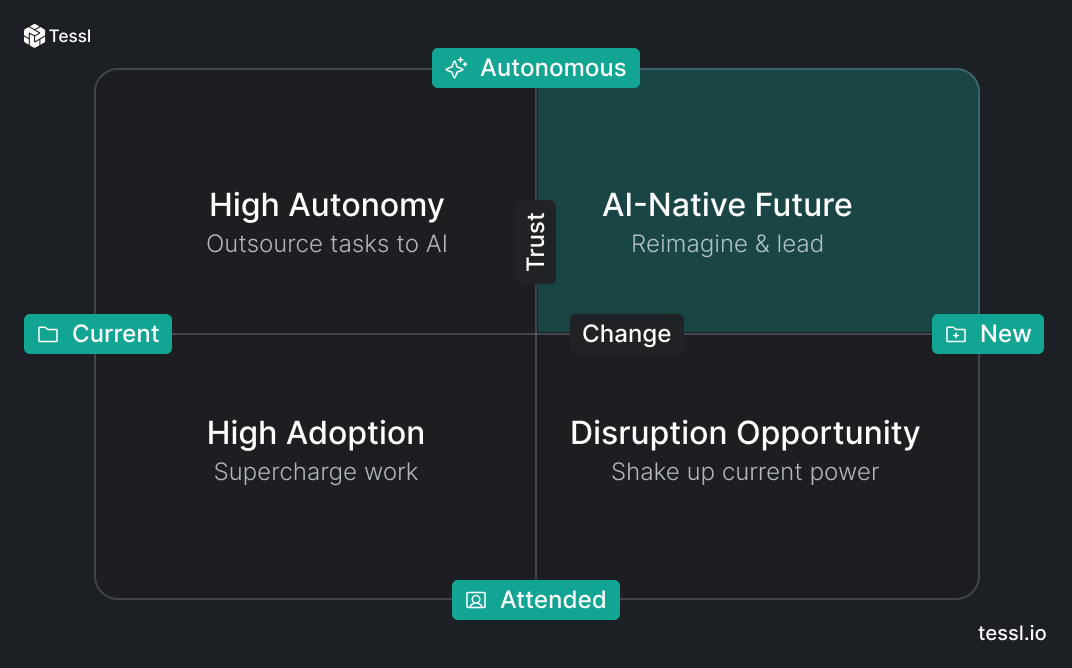

To make sense of this wide range when looking to build, invest in or use an AI tool, I find it helpful to plot AI offerings across two dimensions: Change and Trust.

- Trust indicates how much one needs to trust the product to “get it right” for it to be helpful. It ranges from small tasks whose output you can easily verify (attended workflows) to tasks where the AI must get it right on its own to be valuable (autonomous workflows).

- Change indicates how much you need to change the way you work to use the product. It ranges from products that integrate seamlessly into your current day-to-day work (existing workflows) to those introducing a new way of doing things, requiring your processes to change (new workflows).

Drawing these two axes creates a 2x2 map on which to place solutions and their journeys. Each quadrant represents a different kind of opportunity for AI-powered solutions, and can help shape one’s strategy for building and adopting them.

High Adoption: Supercharge work today

Tools in the bottom-left quadrant are the easiest to adopt, since they introduce very little friction. They fit into your existing ways of working, so it’s easy to try them out, and their results are easily verified, so there’s little risk in doing so. They still need to work well and be helpful for you to keep using them, but there’s little reason not to try.

Code completion tools, such as GitHub Copilot, Gemini Code Assist or Tabnine, are good examples of this. You’re already writing code in your IDE and are used to simple completions, so these tools simply make those completions longer - little change. In addition, the completions are small enough that you can “eyeball” them and confirm they’re reasonable before accepting. If a high enough percentage of the suggestions is correct, such tools can become great productivity boosters.

Enterprise natural language search, such as Glean or Realm, is another example. Users already search all the time, and are used to natural language queries from the likes of Google - all familiar workflows. Once a search is performed, users naturally know whether their question was answered, and can use source links to verify correctness, so little trust is needed to start. As long as the search works well often enough, users can find what they’re looking for faster, with less training and across bigger datasets.

These are just a few of the many AI tools that can help supercharge your work today. Fathom’s note-taking, Genie’s contract redlining, Snyk’s automated vulnerability code fixes and Ambience’s medical scribe are a few more AI-powered examples. They don’t try to change the way you work, and they leave the driving to you, so if they’re accurate enough, they can quickly become part of your routine. This ease of adoption also makes this the most crowded quadrant, and a likely domain for AI features from incumbents who already control the current workflow.

High Autonomy: Outsource Tasks to AI

Moving up the Trust axis, we find solutions that are only valuable if the user trusts the AI to “get it right” autonomously. If a task can’t wait for a human to verify the result, or is too big in scope to go back and forth with the AI on, delegating it only pays off if the AI gets it right on its own. This is especially true when the cost of a mistake is substantial.

This type of AI usage is similar in nature to outsourcing: you’re handing a defined task to another entity and expecting good results. Since we’re still looking at existing workflows, it’s easier to adopt such capabilities for tasks you’re already outsourcing, especially if you outsource them for scale (as opposed to expertise).

A good representative of this quadrant is Fin, Intercom’s autonomous support agent. Support often follows a systematic workflow, which AI can mimic, and client interaction typically happens over chat or email, where you can insert AI with no change. However, a bad customer interaction can be very costly, and having humans verify every answer would cancel out the value, so using Fin requires customers to truly trust it’ll get it right. Intercom’s co-founder explained more on our podcast. Parcha’s KYB AI Agent similarly automates an often-outsourced task, as does Cognition’s automatic resolution of software bugs - though its code still requires review today.

Robotaxis (self-driving taxis) belong in this quadrant too. For consumers, they require very little change in behavior - order a taxi via the app, get in when it arrives, get out at your destination. The change is so small that Uber is literally using the same app for it! However, if AI is doing the driving, you absolutely need to trust it will do it right - your life depends on it.

These examples and others require little change to your process, at least to get started, so they’re easy to adopt. Unlike their neighbors in the quadrant below, though, they’re no good to you unless they get it right in the vast majority of cases.

Disruption Opportunity: Shake up current powers

The bottom-right quadrant is where the true change occurs. It captures solutions that introduce new ways of tackling problems, made feasible by the advent of GenAI, with the potential to be substantially better. Adopting new practices requires humans to change, which is a slow process… but if successful, such new workflows can shake up an industry, since they lean on new capabilities that incumbents are often reluctant to adopt.

GenAI’s impact on the creative world falls into this quadrant. Creating a Midjourney image or a Suno song from a text prompt is easier, faster, cheaper and more accessible than using brushes or playing a guitar. However, it requires creators with different skills and brand new workflows, as well as societal acceptance and lawmakers willing to reexamine intellectual property rights. The need for a human to approve a creation before release isn’t the hurdle; it’s not about trusting the AI to get it right - it’s about changing the way we create.

Synthesia demonstrates this clearly. It lets you create training, sales, social and other videos using AI avatars and text - cheaper, faster and more accessible than physical production, but requiring substantial workflow change. Since you can use your own AI avatar and review generated videos before using them, little trust is needed to start - it just needs to work well. Once this new approach takes hold, it will disrupt those currently recording and producing such videos - and Synthesia is aiming at Hollywood too.

Chat-based web search is another large-scale example of this quadrant. Google’s hold on search users makes website owners invest in being indexed by it and pay to be promoted, so they can bring users to their sites. This gives Google unique data, which it uses to rank results better for users. It feels impossible to unseat. Except…

If search becomes chat-based, as pursued by Perplexity and SearchGPT, the power shifts. Suddenly, the ability to fuse and summarize content becomes key, alongside a new chat-based user experience. The advertising model needs a deep reevaluation, and relationships with content providers are no longer about routing users to their sites. If this new workflow is adopted, you can suddenly imagine Google being disrupted.

This quadrant is the hardest one to map. It contains changes that require imagining a new way of working (which is hard enough), but don’t require high trust. In practice, most solutions in this quadrant quickly migrate to the top-right quadrant - a reimagined future.

AI-Native Future: Reimagine and Lead

At the top right sits our destination - the future. This quadrant holds solutions that assume users have both adapted to new, AI-native workflows and built up trust that the tools will get it right. Representatives of this quadrant often feel like sci-fi, as it’s hard to say how far into the future we should be looking. Sometimes, you can imagine a change happening in 3-5 years. Other times, macro changes are required, and we may be talking decades.

Let’s take AI corporate lawyers as an example. Highlighting risky clauses to a lawyer is a bottom-left productivity booster, while outsourcing legal negotiation to an AI agent requires substantial trust. Once such AI lawyers earn trust and adoption, change will follow. AIs will start negotiating with each other, reaching conclusions almost instantly and at far lower cost. The skills needed to be a great corporate lawyer will change, and new law firms may employ more developers and data scientists than lawyers.

If that happens, how would our use of legal services change? Would lower cost and greater ease lead us to draw up contracts more often? Would AI-created claims overwhelm courts and judges, requiring them to use AI to cope? And how would we deal with the risk and cost of flawed legal advice? Startups with a strong vision can aim to define this future reality, lobbying and building solutions to steer markets and society towards it. They can disrupt the incumbents, and set themselves up to lead the new reality.

Similar evolutions can be imagined in many domains. If self-driving cars become the norm, urban planning, traffic laws and car insurance will change drastically. If music becomes AI-generated, static songs may become dynamic, collaboratively created with the listener - a new kind of active listening. If AI therapists become accepted, therapy could become far more accessible, perhaps helping us tackle society’s growing anxiety. These destinations are wild, scary to some and exciting to others, and they all present opportunities to shape and lead the way there.

What about Tessl?

I’ll use Tessl as the last example of the Future quadrant. Bottom-left coding assistants will eventually evolve into top-left autonomous engineers, producing code at a pace that only AI maintenance can keep up with. And yet, code would remain the true representation of an application and the center of software development - is that really the desired destination?

We believe the true future comes when we detach ourselves from the code: when we let users specify what they want, and have AI handle the how. This will let us create both iPhone and Android versions from the same spec, optimize implementations for each organization’s environment, and have software that is autonomously maintained. Furthermore, it will enable anyone to create software, practically instantly and without needing to know how to code.

Such AI-native software development won’t be done with current tooling. Writing, editing, testing, evolving and distributing specs and adaptive implementations requires a new software factory - which is what we’re building. Embracing a new paradigm requires substantial change and a fair bit of trust, so it won’t happen overnight. However, the move from a code-centric to a spec-centric software development paradigm offers an opportunity to reimagine the world of software development - and lead it.

Journeying through the quadrants

In practice, companies and solutions will journey through these quadrants. As a builder, investor and consumer of new AI tools, I try to understand where they play today and how they intend to move along the two dimensions.

Here’s my high-level guideline:

If you build purely for the top right today, you won’t get users, as change and trust develop slowly. However, if you focus only on the bottom left, you will face fierce competition and risk becoming irrelevant as the world embraces new ways of working. A startup’s vision should always be anchored in the future (to be relevant tomorrow), but execution should start from the bottom left (to get adoption today) and chart a journey to the top right.

That said, many journeys can be viable, even within the same industry. Tesla started in Adoption with Autopilot driver assistance and is moving into Autonomy with Robotaxis, while Waymo started in Autonomy right away, focusing on IP and relying on partnerships to get adoption. Synthesia started in the bottom right (Change) with short, attended videos, and will require more trust as it produces bigger pieces for consumer media. Isomorphic Labs seems to be building directly for the Future, while offering easier-to-adopt research helpers that fit somewhere in the bottom half.

When I look at building, investing in or even consuming a solution with AI at its core, I find it helpful to understand where it sits on this map and chart its potential journeys. It often clarifies which strengths are needed in the long run, who the future competitors would be, and which go-to-market (GTM) strategy is right.

At Tessl, we’re clearly anchored in the top right. As for what our journey there will look like… stay tuned, and you’ll find out ;)