If you’ve ever found yourself pleading with an LLM to adhere to a constraint — using all caps, repeating lines n times, using words like “seriously” and “please” and “I will lose my job if you don’t return pure JSON” — then you’re guilty of begging, to borrow a term from our friend John Berryman. Begging is a bit of a smell. It’s a hint that your prompt might not be structured in a way that gives the LLM the best chance of completing the task.

Quit begging!

Of course, many of us have used begging patterns because we observe that they improve our results. Formatting, emphasis and repetition all matter in prompting. But you may be missing something more fundamental in your prompt’s structure.

Are you giving the LLM a riddle when you should be giving it an instruction?

What is Task Framing?

I want to share a technique I’ve been calling task framing: a way of writing prompts that bakes certain expectations and constraints into the task definition itself, rather than tacking them on as afterthoughts at the end. I want to explain exactly what I mean, and why it works so well.

Let’s take a (perhaps overly) simple example. I want some text about Leonardo da Vinci. It should be very information dense, but not too long. Here’s my prompt:

Write an essay about Leonardo da Vinci, and how he contributed not

only to arts and mechanical innovations, but also advanced science

and anatomy. Include details about his works, their relevance in

modern society, his personal life, and his teachings and

contemporaries. Where relevant, include specific examples and

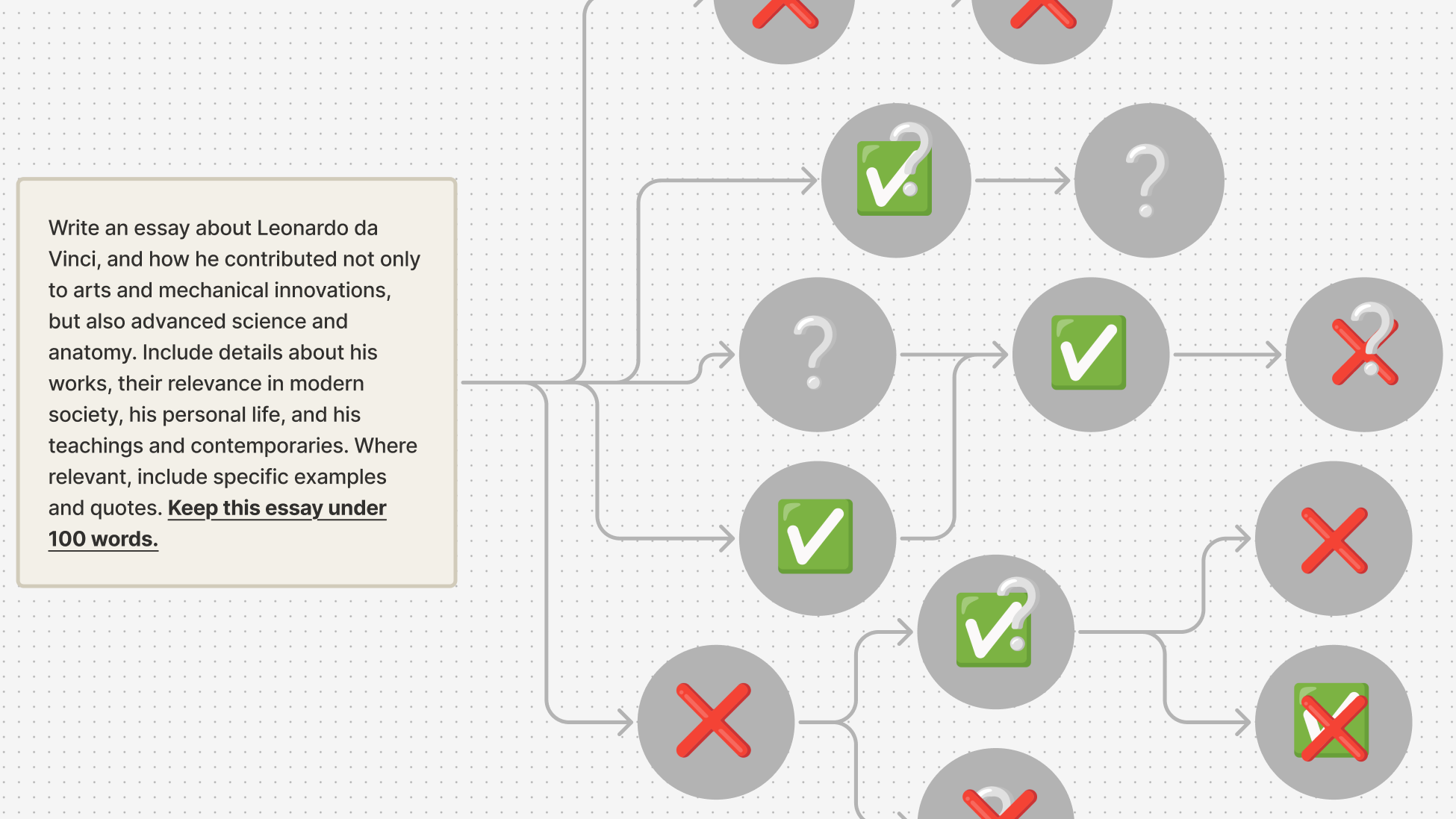

quotes. Keep this essay under 100 words.Remember, LLMs are prediction machines. They process your input sequentially, generating and weighting possible solutions before they’ve even finished reading the instructions (relatable). In this example, we’ve spent the first 90% of our prompt describing a task which is easily accomplished without the constraint. This has material consequences: the LLM will immediately find possible solutions, and its best ideas are unlikely to result in a <100 word essay.

Introducing the constraint at the end massively narrows the scope of good solutions. Rather than discard the work it has already done, the LLM will re-weight its internal probabilities to adapt to the new information, compromising between its initial path and the one it is now being instructed to follow. This is a recipe for constraint violation (in this case, exceeding the word count), and the violation may be set in motion before the constraint is even processed.

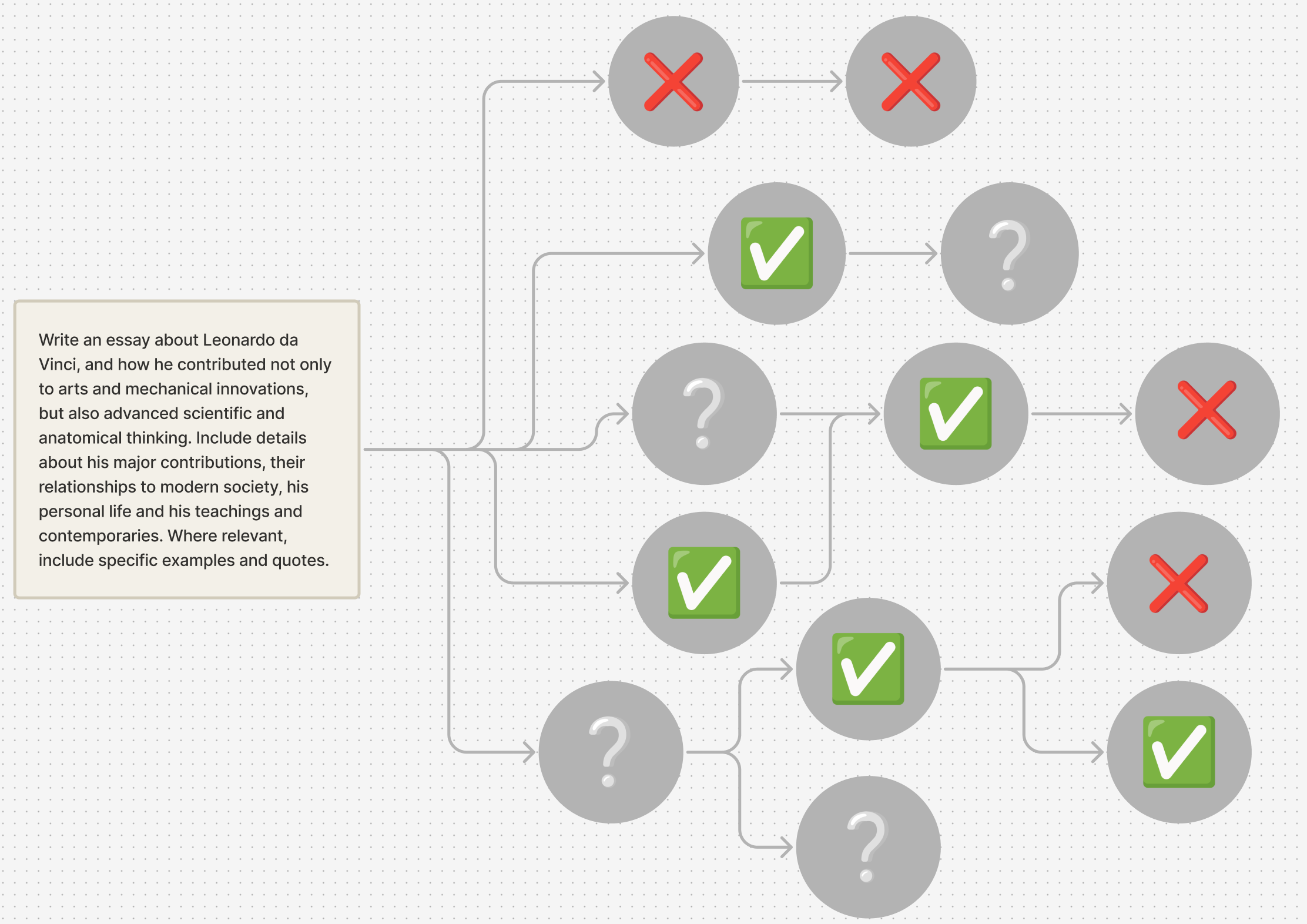

Using task framing, we might rewrite the prompt like this:

> Write a concise, 100-word blurb about Leonardo da Vinci, focusing on highlights of his major contributions to art, mechanical innovations, science and anatomy. Briefly touch on key works, their relevance today, his personal life, teachings and contemporaries. Use specific examples and quotes where relevant.

Here, we’ve made the 100-word constraint central to the definition of the task. It’s called out from the very start, helping our LLM choose a better solution earlier, avoiding heavy compromise, and reducing the chance that it picks a strategy that would violate our rules. Words like ‘blurb’ and ‘highlights’ reinforce the point: we need a succinct piece of text.
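If it helps to see the difference in concrete terms, here is a rough Python sketch that measures how much of each prompt comes before the word-count constraint first appears. It is a crude, character-based proxy, not a model of how an LLM actually weighs tokens; the `constraint_position` helper and its regex are purely illustrative.

```python
import re

# The two Leonardo da Vinci prompts from this post, each joined into one string.
ESSAY_PROMPT = (
    "Write an essay about Leonardo da Vinci, and how he contributed not only "
    "to arts and mechanical innovations, but also advanced science and anatomy. "
    "Include details about his works, their relevance in modern society, his "
    "personal life, and his teachings and contemporaries. Where relevant, "
    "include specific examples and quotes. Keep this essay under 100 words."
)
FRAMED_PROMPT = (
    "Write a concise, 100-word blurb about Leonardo da Vinci, focusing on "
    "highlights of his major contributions to art, mechanical innovations, "
    "science and anatomy. Briefly touch on key works, their relevance today, "
    "his personal life, teachings and contemporaries. Use specific examples "
    "and quotes where relevant."
)

# Hypothetical pattern for this example: matches "100 words" or "100-word".
WORD_LIMIT = re.compile(r"100[- ]words?")


def constraint_position(prompt: str) -> float:
    """Return the fraction of the prompt (by characters) that precedes the constraint.

    Returns 1.0 if the constraint never appears, i.e. the whole prompt is read without it.
    """
    match = WORD_LIMIT.search(prompt)
    if match is None:
        return 1.0
    return match.start() / len(prompt)


if __name__ == "__main__":
    for label, prompt in [("essay", ESSAY_PROMPT), ("task-framed", FRAMED_PROMPT)]:
        print(f"{label}: constraint starts {constraint_position(prompt):.0%} of the way in")
```

For the essay prompt, the constraint arrives in the last few percent of the text; for the task-framed version, it arrives in the first sentence.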

Constraint Ordering vs. Task Framing

Although most examples of good task framing will place “constraints” towards the beginning of the prompt, there is a nuanced difference here. Consider these two prompt openers:

Constraint Ordering:

> Use only the standard library.
>
> Write a CSV Parser function which (...)

Task Framing:

> Write a pure, self-contained CSV Parsing function using only built-in operations (...)

In the constraint ordering example, we’re treating the constraint as a separate rule to follow. The LLM will start placing bets and activating patterns immediately, and “Use only the standard library” is a nebulous way to start. To get unscientific for a moment, it feels dissonant with the rest of the request. Imagine receiving the instruction: “Spend only $20.” …Okay? On what? (As an aside: I find this a helpful hint when evaluating a prompt. If it sounds awkward and unnatural to say out loud, you may want to reword something.)

Functionally, placing constraints towards the beginning of your prompt is almost always preferable to placing them at the end, but we can still do better. Consider the task framing example. Not only does this better represent the type of task the LLM has likely been heavily trained on, but our constraint is no longer an extraneous rule. Instead, it’s inherent to the characteristics of the task.
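To make “inherent to the characteristics of the task” concrete, here is roughly what a pure, self-contained CSV parsing function can look like in Python: no imports at all, just string operations and a small state machine for quoted fields (RFC 4180-style quoting, where delimiters and line breaks inside quotes are literal and `""` stands for one quote character). `parse_csv` is a hypothetical name, and this is an illustrative sketch, not output from the authors' workflow.

```python
def parse_csv(text: str, delimiter: str = ",") -> list[list[str]]:
    """Parse CSV text into rows of string fields using only built-in operations."""
    rows: list[list[str]] = []
    row: list[str] = []
    field: list[str] = []
    in_quotes = False
    pending = False  # True once the current row has consumed any character
    i, n = 0, len(text)
    while i < n:
        ch = text[i]
        pending = True
        if in_quotes:
            if ch == '"':
                if i + 1 < n and text[i + 1] == '"':
                    field.append('"')  # doubled quote inside quotes -> literal quote
                    i += 1
                else:
                    in_quotes = False  # closing quote
            else:
                field.append(ch)  # delimiters and newlines are literal inside quotes
        elif ch == '"':
            in_quotes = True
        elif ch == delimiter:
            row.append("".join(field))
            field = []
        elif ch in "\r\n":
            if ch == "\r" and i + 1 < n and text[i + 1] == "\n":
                i += 1  # treat \r\n as a single line break
            row.append("".join(field))
            rows.append(row)
            row, field = [], []
            pending = False
        else:
            field.append(ch)
        i += 1
    if pending:  # flush the last row when the text doesn't end with a newline
        row.append("".join(field))
        rows.append(row)
    return rows


print(parse_csv('a,b\n"x, y","say ""hi"""\n'))
```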

While these examples illustrate the core concept, task framing becomes especially powerful in complex workflows, where you might encounter:

- Competing objectives

- A large, crowded context

- The LLM having to “remember” state between multiple exchanges or steps

- A “conflicting” constraint, where the task is easily solved without it

Recently, we’ve had success using this technique (among others) to eliminate the use of external dependencies in our code generation. Describing a coding task — let’s take the CSV parser from our earlier example — requires us to detail a problem that is easily solved by using an external dependency (or two). Not only is this a good solution, but LLMs have been trained on many such examples, so tackling this problem was a bit of an uphill battle. Re-framing the task to bake in our restrictive expectations from the beginning did the trick.

Takeaways

Next time your LLM strays from its task, instead of reaching for caps lock, consider:

- What is the goal of my task?

- What natural language patterns would I use to convey the intent of the task to a human?

- How can I make my constraints (or rules) a core part of the fabric of the task?

By treating constraints as fundamental characteristics rather than afterthought rules, you'll build more reliable, maintainable prompts. No need to beg in production!

Join Our Newsletter

Be the first to hear about events, news and product updates from AI Native Dev.